collectives/python/solution/broadcast.py

0 → 100644

collectives/python/solution/gatherv.py

0 → 100644

collectives/python/solution/scatter.py

0 → 100644

datatypes/LICENSE.txt

0 → 100644

datatypes/README.md

0 → 100644

datatypes/c/custom_type_a-b.c

0 → 100644

datatypes/c/solution/custom_type_a.c

0 → 100644

datatypes/c/solution/custom_type_b.c

0 → 100644

datatypes/c/solution/struct_type_c.c

0 → 100644

datatypes/c/solution/struct_type_d.c

0 → 100644

datatypes/c/struct_type.c

0 → 100644

datatypes/fortran/custom_type_a-b.F90

0 → 100644

datatypes/fortran/solution/custom_type_a.F90

0 → 100644

datatypes/fortran/solution/custom_type_b.F90

0 → 100644

datatypes/fortran/solution/struct_type_c.F90

0 → 100644

datatypes/fortran/solution/struct_type_d.F90

0 → 100644

datatypes/fortran/struct_type.F90

0 → 100644

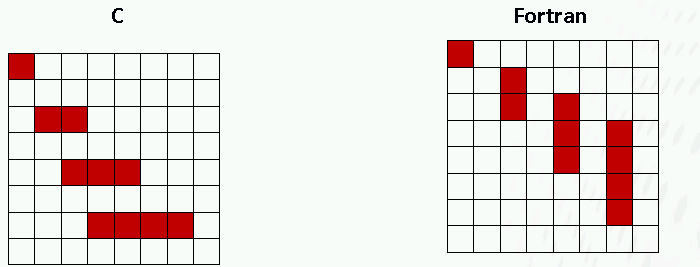

datatypes/img/indexed.png

0 → 100644

7.19 KiB

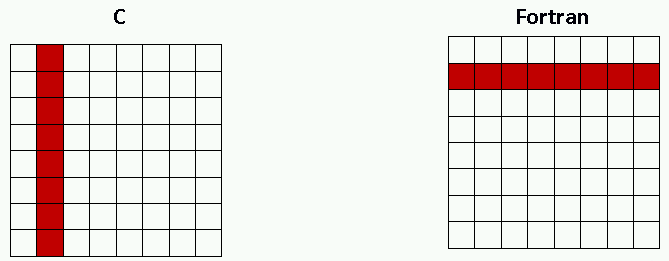

datatypes/img/vector.png

0 → 100644

2.26 KiB

datatypes/python/skeleton.py

0 → 100644